Ever wondered when artificial intelligence started? You might think it’s a new thing, but its history goes way back. It’s a story that takes us from ancient myths to today’s tech wonders.

In the 1950s, when computers were super expensive, AI was already starting to take shape. Big names like Alan Turing and John McCarthy were setting the stage for AI’s future.

AI’s journey has had ups and downs over the years. The 1956 Dartmouth Conference gave the field its name, “artificial intelligence.” But there were also tough times, like the AI winters of the mid-1970s and the late 1980s to early 1990s. Still, the early ideas kept pushing forward, leading to big wins like IBM’s Deep Blue beating Garry Kasparov in chess in 1997.

Now, AI is everywhere, from our smart speakers to how companies predict what we’ll do next. This article will take you on a journey through AI’s history. You’ll see how its past shapes its present and future.

The Ancient Roots of Artificial Intelligence

The idea of artificial intelligence goes way back. It started with ancient civilizations, where people thought sacred statues had wisdom and feelings. These early thoughts helped shape the AI we use today.

Mythological AI: From Talos to Pygmalion

Artificial beings have been in our stories for thousands of years. Greek myths told of Talos, a giant of bronze, and of Pygmalion’s statue that came alive. These stories show how our ancestors were interested in making artificial beings.

Early Philosophical Concepts of Artificial Beings

Philosophers in the first millennium BCE were already laying the groundwork. Thinkers in China, India, and Greece developed formal methods of reasoning. By the 1600s, thinkers like Leibniz and Descartes were asking whether rational thought could be made systematic.

Medieval Legends and Alchemical Pursuits

In the Middle Ages, people kept dreaming of artificial life. They told stories of the Golem, and alchemists tried to create life from nothing. In the 13th century, Ramon Llull designed “logical machines,” devices meant to produce new knowledge by mechanically combining basic concepts. His ideas later influenced Leibniz.

These ancient ideas show that making smart machines has been a dream for thousands of years. From myths to deep thoughts, AI’s journey is as old as humans.

The Birth of Modern AI: From Fiction to Reality

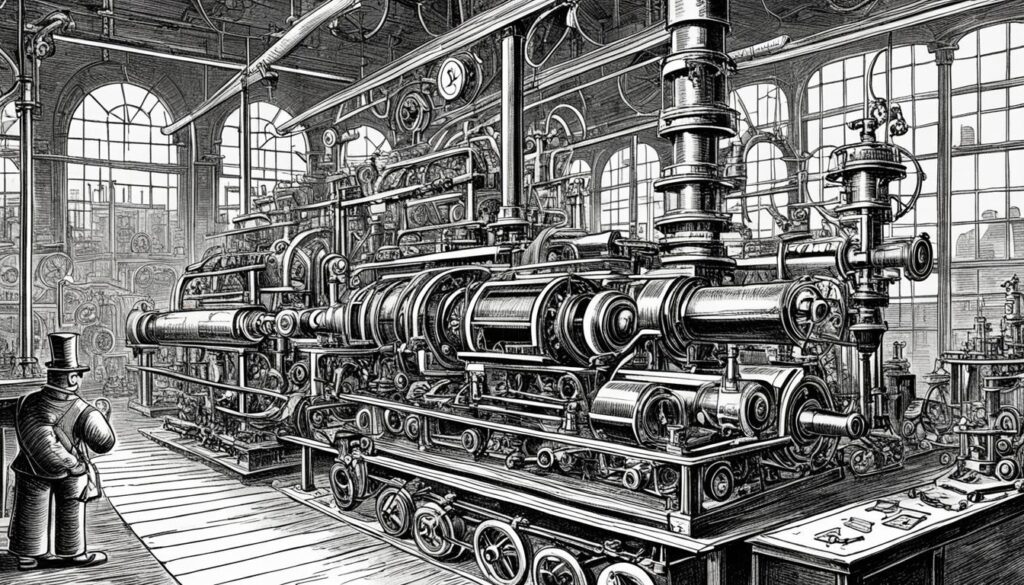

The story of modern AI started in fiction. Mary Shelley’s Frankenstein (1818) and Karel Čapek’s R.U.R. (1920), the play that introduced the word “robot,” made people think about artificial beings. These stories helped set the stage for future AI research.

In the 1940s, everything changed. The creation of programmable digital computers made scientists dream of building an electronic brain. This move brought AI from stories to real research.

Early AI research made big steps forward. In 1950, Claude Shannon built Theseus, a mechanical mouse, guided by relay circuits, that could find its way through a maze and remember the route. This showed early signs of machine learning. The term “artificial intelligence” was first used in 1956 at a Dartmouth College conference.

The Logic Theorist, written in 1955 and 1956, is often called the first AI program. It could prove mathematical theorems using symbolic logic. This breakthrough showed AI’s potential to do complex tasks.

Now, AI is a big part of our lives. Facial recognition is in smartphones and public places. Virtual assistants like Alexa and Siri are everywhere. AI also shapes what we see online through recommendation engines.

The journey from science fiction to reality is ongoing. AI is moving into new areas like self-driving cars and creating images.

Foundations of AI: Mid-20th Century Developments

The 1950s were a big time for AI. Innovations back then helped create the AI systems we use now. Let’s look at some key events that changed the game.

Alan Turing’s Contributions to AI

Alan Turing was a big deal for AI. In 1950, he published “Computing Machinery and Intelligence,” a paper that asked whether machines can think and proposed what we now call the Turing test.

The test checks whether a machine can hold a conversation so well that a human judge can’t tell it apart from a person. It’s still a reference point in AI research today.

The Dartmouth Conference: Coining “Artificial Intelligence”

The Dartmouth Conference in 1956 was a big moment for AI. John McCarthy had coined the term “artificial intelligence” in his proposal for the meeting. It brought together top minds to talk about things like neural networks and natural language processing.

Logic Theorist: The First AI Program

In 1955 and 1956, Allen Newell, Cliff Shaw, and Herbert Simon wrote the Logic Theorist. Often described as the first AI program, it could prove mathematical theorems. It was presented at the Dartmouth Conference, showing what AI could do.

These early steps led to AI’s huge growth. From Turing’s big ideas to the Logic Theorist’s real-world use, we’ve come a long way. Now, we live in a world shaped by AI.

AI Has Been Around Longer Than You Think

The history of AI goes way back, beyond what many know. Recent breakthroughs like GPT models are big news, but AI’s roots are decades old. Early work set the stage for today’s amazing advancements.

In 1950, Claude Shannon built Theseus, an early machine that could solve a maze. This was a key moment for those leading the AI charge. In the 1980s, graphical user interfaces changed how we use computers. Microsoft’s Windows, first released in 1985, became a key player and shaped personal computing for years afterward.

Jump to 2022, and AI’s progress is clear. An OpenAI model did amazingly well on an AP Biology exam, scoring 59 out of 60 on multiple-choice questions. This shows how far AI has evolved from its early days.

Now, AI touches many areas of life. It’s helping tackle big health issues, with the aim of cutting child deaths in poor countries. In schools, AI tools are being used to try to improve students’ math skills. It’s also helping in the search for solutions to climate change.

The story of AI is still unfolding. As technology keeps moving forward, AI will continue to change our world in ways the early pioneers dreamed of.

The Golden Age of AI: 1956-1974

The golden age of AI lasted from 1956 to 1974. It was a time of great progress in artificial intelligence. This era brought a lot of hope and new discoveries that helped shape today’s AI.

Major Breakthroughs and Optimism

During this time, there were many exciting early AI breakthroughs. In 1958, John McCarthy created Lisp, a key language for AI research. Then, in 1970, Terry Winograd’s SHRDLU program at MIT showed how AI could understand natural language within a simple world of blocks.

At the Stanford Research Institute (SRI), the Artificial Intelligence Center worked on robotics. From 1966 to 1972, its researchers built Shakey, the first mobile robot that could reason about its own actions.

Government Funding and Research Initiatives

Government funding was key to moving AI forward. In 1963, ARPA (later renamed DARPA) gave about $2 million to MIT’s Project MAC. This money helped with AI, operating systems, and computational theory research.

This support let researchers work on big projects. It helped push AI to new heights.

Challenges and Limitations

Even with the excitement, AI faced big challenges. The computers of the time couldn’t handle complex tasks easily. Understanding natural language and abstract thinking were harder than expected.

These issues led to a drop in funding. By 1974, the first AI winter started, ending the golden era of AI.

The First AI Winter: Setbacks and Funding Cuts

In 1974, the field of artificial intelligence faced a harsh reality. The promising technology hit a wall, leading to what became known as the AI winter. This period saw significant funding cuts and major research challenges that slowed progress for years.

The UK government’s Lighthill report in 1973 dealt a heavy blow to AI. It criticized the field for failing to meet its lofty goals, despite substantial investments. As a result, the UK dismantled most of its AI research programs. This decision rippled across Europe and even impacted the United States.

DARPA, a key supporter of AI in the U.S., withdrew $3 million in annual grants for speech recognition research at Carnegie Mellon University. The agency shifted its focus to more practical AI projects, moving away from fundamental research. This change in direction left many scientists struggling to secure funding for their work.

The AI winter wasn’t just about money. It also highlighted technical limitations. Researchers faced the combinatorial explosion problem: the number of possibilities a program has to consider grows exponentially as a problem gets bigger. This challenge, combined with limited computational power, made progress slow and difficult.

Despite these setbacks, the AI winter allowed for a reassessment of goals. It forced researchers to rethink their approaches and set more realistic expectations. This period of reflection would eventually pave the way for future breakthroughs in artificial intelligence.
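
To get a feel for combinatorial explosion, here is a minimal Python sketch. It assumes a made-up problem where every step offers 30 possible choices (the number 30 and the function name `sequences_to_examine` are illustrative assumptions, not figures from any historical system) and counts how many step-by-step sequences a brute-force search would have to consider.

```python
def sequences_to_examine(choices_per_step: int, steps: int) -> int:
    """Count every possible sequence of `steps` decisions when each
    decision offers `choices_per_step` options (brute-force search)."""
    return choices_per_step ** steps


# Illustrative assumption: 30 choices at every step.
for steps in (2, 4, 6, 8):
    print(f"{steps} steps ahead: {sequences_to_examine(30, steps):,} sequences")
```

Each extra step multiplies the work by 30 rather than adding to it, which is why looking just a little further ahead quickly overwhelmed the hardware of the 1970s.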

AI Renaissance: The 1980s Resurgence

The 1980s brought a big change to artificial intelligence, making it exciting again. After a slow period, this decade saw big steps forward in AI research.

Expert Systems and Their Impact

Expert systems changed the game back then. They mimicked the decision-making of human experts, solving complex problems by applying if-then rules. They were used in many areas, like medicine and finance.
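
The rule-based idea can be sketched in a few lines of Python. This is a toy illustration only, assuming a simple "forward chaining" loop; the rules, fact names, and the `forward_chain` function are hypothetical and not taken from any real expert system.

```python
def forward_chain(facts, rules):
    """Apply if-then rules over and over until no new facts appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire a rule only if all its conditions are already known.
            if conclusion not in known and all(c in known for c in conditions):
                known.add(conclusion)
                changed = True
    return known


# Hypothetical toy rules: (conditions, conclusion).
RULES = [
    (("stable_income", "low_debt"), "low_risk"),
    (("low_risk", "good_history"), "approve_loan"),
]

print(sorted(forward_chain({"stable_income", "low_debt", "good_history"}, RULES)))
```

The second rule can only fire after the first one has added `low_risk`, which shows how chains of simple rules can build up to a conclusion. Real systems of the era used far larger rule sets written with the help of human experts.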

Japan’s Fifth Generation Computer Project

In 1982, Japan launched the Fifth Generation Computer Project with $400 million in funding. It aimed to build computers that could reason and hold conversations the way people do. Even though it fell short of its goals, it inspired many AI researchers around the world.

Neural Networks and Machine Learning Advancements

The 1980s brought back interest in neural networks. These AI systems could learn from data and get better over time. This led to the development of modern machine learning. Researchers such as John Hopfield and David Rumelhart revived and popularized neural network methods, including backpropagation, setting the stage for the deep learning that came later.

This time of growth laid the foundation for today’s AI advancements. It showed AI could solve real-world problems and brought back excitement in the field.

Milestones in AI: Late 20th Century Achievements

The late 20th century was a big time for AI. In 1997, IBM’s Deep Blue beat world chess champion Garry Kasparov. This win showed AI’s growing skills in making complex decisions and planning strategies.

Around the same time, speech recognition software started showing up on Windows PCs. This brought AI into our daily lives, letting us talk to our devices with voice commands. It opened the door for more work in understanding and processing natural language.

Then, Kismet, an expressive robotic head made by Cynthia Breazeal at MIT, came along. Kismet could recognize and show emotions, which was a big step forward in how humans and robots talk to each other. This showed AI’s potential to communicate in more human-like ways.

These achievements in the late 20th century set the stage for what was to come. They showed AI could do special tasks, talk to humans naturally, and act like us in some ways. As we entered the 21st century, these early wins made way for even faster growth in AI.

The 21st Century AI Boom: Big Data and Deep Learning

The 21st century has brought a big change to artificial intelligence. Big data and deep learning have made AI soar. This growth comes from better computers and lots of data.

Moore’s Law and Computational Power

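Moore’s Law, the observation that the number of transistors on a chip doubles roughly every two years, has been key to AI’s growth. By some estimates, training a model like GPT-3 has become about ten times cheaper since 2020. This makes AI more affordable and accessible.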

Breakthroughs in Natural Language Processing

Natural language processing has made huge strides. In some reported experiments, large language models have inferred people’s mental states with around 95% accuracy. Progress like this has led to more advanced AI uses across industries.

AI in Industry and Everyday Life

AI is now a big part of our lives. We see it in virtual assistants and self-driving cars. By some surveys, over half of companies use AI in some way. Some analysts estimate that AI could add around $15 trillion to the world economy by 2030.

The AI boom is still going strong. As deep learning gets better, we’ll see more new AI uses. This fast progress is changing how we work and live with technology.

Current State and Future Prospects of AI

AI has made great strides, bringing both thrilling opportunities and tough challenges. By one survey, 42% of large companies already use AI, and another 40% are exploring it. Generative AI is catching on too, with 38% of organizations already using it and 42% planning to.

AI is changing many fields. In healthcare, it helps spot diseases quicker and more accurately. Banks use it to fight fraud and make investment choices. Schools benefit with tools that detect plagiarism and tailor learning to each student. The transport sector is preparing for big changes with self-driving cars and AI travel guides.

The future of AI looks promising but also brings challenges. Experts say 44% of workers’ skills will be affected by AI from 2023 to 2028. This could lead to job losses in some areas but also create new ones. Some projections also suggest AI’s energy use could increase carbon emissions by as much as 80%, which would be a serious environmental problem.

As AI grows, talking about ethics and policies is more vital than ever. The fast pace of AI makes us wonder about its effects on society, jobs, and how we interact with AI in the future. Finding a balance between AI’s benefits and risks is key to making a future where AI helps us rather than replaces us.